Who is responsible in an accident involving a self-driving car?

The research centre RobotRecht (“RobotLaw”) in Würzburg was set up specifically to deal with techno-legal questions regarding self-driving cars

Adapted from an original article by the Goethe-Institut

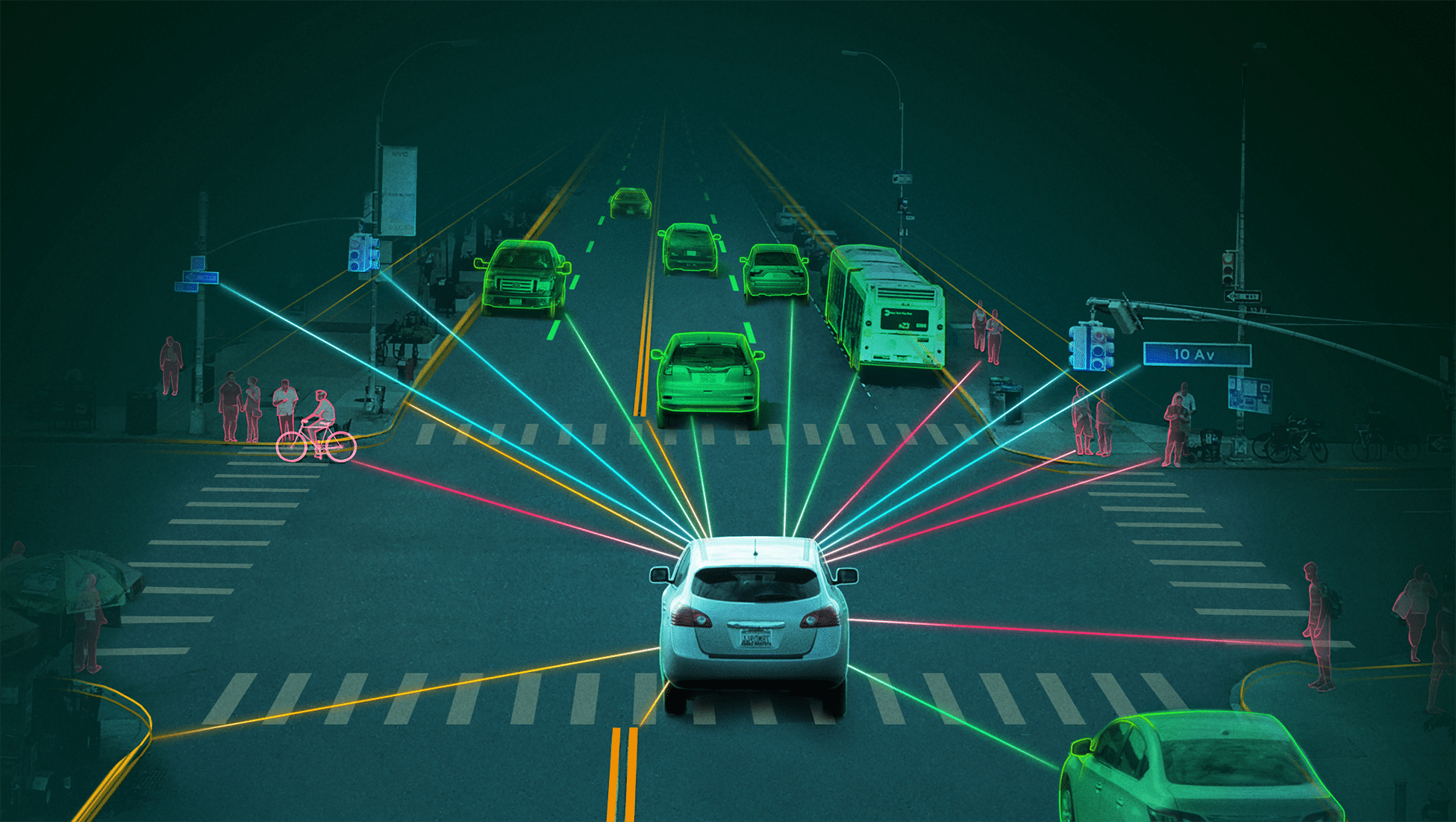

According to Ptolemus Consulting Group, the first strategy consulting and market research firm entirely focused on augmented mobility and automation, by 2030 there will be more cars on the world's roads with ADAS (Advanced Driver-Assistance Systems) than without. By that time, 370 million vehicles will have some automated features.

Which aspects of a vehicle would be affected by automation? And what does that involve in an autonomous vehicle?

Those are among the questions that a team, the Forschungsstelle RobotRecht (Robotics Law Research Centre) in Würzburg, Germany, is trying to answer.

RobotRecht was established in mid-2010 at the Chair of Criminal Law, Criminal Justice, Legal Theory, Information Law and Legal Informatics at the Faculty of Law, University of Würzburg. The multi-member team has faced various legal challenges posed by technological developments in the field of autonomous systems. These have ranged from driverless motor vehicles to industrial or household robots that work cooperatively with human beings, all the way to new forms of artificial intelligence software.

Below, insights by Max Tauschhuber, research associate at RobotRecht.

What ‘autonomous’ means

It’s important to use the terminology carefully. For vehicles, we distinguish between multiple levels. In 2021, the German Federal Highway Research Institute introduced a new categorisation that comprises three levels.

The first level is the assisted mode. Drivers are supported in carrying out certain driving tasks, but they still need to continuously monitor the system and their surroundings. Examples include cruise control and lane-keeping assistants.

Then there’s the automated mode. In this mode, drivers can undertake activities unrelated to driving while the system drives the vehicle. They could read something, for example. However, drivers must remain sufficiently alert to take over the task of driving again in a timely manner when prompted by the system.

The third mode is the autonomous mode. Only the system drives the vehicle. Any humans on board are just passengers. Buses that operate in autonomous mode are also called shuttles. It’s conceivable that these vehicles might no longer have a steering wheel or other controls.
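The three modes can be summarised as a small lookup table. This is only an illustrative sketch of the description above: the names `DrivingMode`, `HumanRole` and `ROLES` are hypothetical and do not come from the Federal Highway Research Institute's categorisation, and the flags reflect one reading of the text, not legal definitions.

```python
from dataclasses import dataclass
from enum import Enum


class DrivingMode(Enum):
    """The three modes described above (names are illustrative)."""
    ASSISTED = "assisted"
    AUTOMATED = "automated"
    AUTONOMOUS = "autonomous"


@dataclass(frozen=True)
class HumanRole:
    """What is expected of the human on board (one reading of the text)."""
    must_monitor: bool              # watch system and surroundings at all times
    may_do_other_activities: bool   # e.g. read while the system drives
    must_stay_ready_to_drive: bool  # able to take over the driving task


ROLES = {
    # Assisted: the driver is supported but keeps monitoring everything.
    DrivingMode.ASSISTED: HumanRole(True, False, True),
    # Automated: other activities are allowed, but the driver must be
    # alert enough to take over in time when the system prompts.
    DrivingMode.AUTOMATED: HumanRole(False, True, True),
    # Autonomous: only the system drives; humans are just passengers.
    DrivingMode.AUTONOMOUS: HumanRole(False, True, False),
}

for mode, role in ROLES.items():
    print(mode.value, role)
```

The table makes the key difference visible: only the autonomous mode releases the human from having to be ready to drive.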

Legal considerations and autonomous vehicles

With regard to fundamental questions that can’t be described through norms, the automotive industry has an interest in having them resolved academically. Ideally, this is done before a vehicle gets onto the road at all. Companies often do this through research co-operations – that’s where we partly get our funding – or PhD projects.

“You cannot expect anyone to know at what point the system will make a mistake”

What kind of liability?

In case of an accident with an assisted, automated or autonomous vehicle, who is responsible if the accident was caused by a system decision?

There are two sides to consider if you try to solve it legally.

Firstly, in road traffic, we have so-called strict liability which can apply regardless of fault. You are liable for bringing a permitted hazard – a vehicle – into the public sphere.

However, there is also fault-based liability. In a sense, this is the opposite of strict liability. You need to prove that the defendant acted at least negligently.

This distinction will become more important as autonomous systems are used in road traffic, because mistakes made by the system aren’t foreseeable. You cannot expect anyone to know at what point the system will make a mistake. That’s why there will likely be a shift away from fault-based liability towards strict liability and even more so towards a vehicle manufacturer’s liability.

Criminal liability is harder to determine. In criminal law, we have the so-called fault principle. This means you have to prove in each instance that the perpetrator is personally at fault. In criminal law, fault means intent or negligence. In road traffic, you’ll rarely be able to assume intent. If you can, though, the case is straightforward.

It’s primarily negligence where things get complicated. Imagine: while parking with a parking assistance system, a child that is playing in the parking area is injured because the vehicle’s sensors were dirty and the vehicle didn’t see the child. Acting negligently means disregarding the due diligence that is required in traffic. That’s the basic definition.

Now you could argue that the parking assistant is designed for self-parking so that the driver doesn’t need to pay attention to anything after the parking assistant has been activated. However, an anticipatory driver would assume the possibility of dirt – and the possibility of children playing in the parking lot. Thus, the fact that an autonomous technical system is used does not a priori exclude the user of the system from any criminal liability.

Accident with a completely autonomous vehicle

This has become more complicated as well. For example, how a vehicle should deal with decisions that affect lives or physical integrity is now regulated by law.

One prerequisite is that a motor vehicle incorporates a system for accident avoidance, meaning it should be able to avoid and reduce harm. Here’s the interesting bit: when the system recognises a situation in which a violation of legally protected rights – such as physical integrity or even life – is unavoidable, the system must be able to identify independently which legally protected right it should prioritise. Problems like this are ethical dilemmas.

If there are two alternative unavoidable harms, the vehicle’s system should automatically attribute the highest priority to the protection of human life. Thus, if the vehicle has a choice between injuring and killing a human being, it should choose the option that injures rather than kills.

We can take it further from there. What if a human life is at stake in both alternatives? In these cases, the law dictates that the system must not allow for any prioritisation of individual attributes. We cannot have a situation where a vehicle detects people’s ages and automatically kills the older person.

However, systems also shouldn’t evaluate quantitatively. They shouldn’t decide between 100 human lives and one human life. That is the big ethical discussion in such cases. It has been going on for thousands of years – before autonomous vehicles even existed. Now vehicles are supposed to be able to do it, but for the time being, that probably won’t be technically feasible.
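The rules described above can be sketched as a very small decision function. This is a simplified illustration under stated assumptions, not the legal text and not how any real vehicle system works: `Harm` and `preferred_option` are hypothetical names, and each unavoidable outcome is reduced to a single flag saying whether it endangers a human life.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Harm:
    """One unavoidable outcome (hypothetical, heavily simplified)."""
    label: str
    endangers_life: bool
    people_affected: int = 1  # recorded, but deliberately never compared


def preferred_option(a: Harm, b: Harm) -> Optional[Harm]:
    """Return the outcome the rules favour, or None if they favour neither.

    Only one rule produces a preference: protecting human life comes first.
    Personal attributes such as age are not even part of the data model, and
    the number of people affected is never weighed, so two outcomes that both
    endanger life yield no preference.
    """
    if a.endangers_life != b.endangers_life:
        return b if a.endangers_life else a
    return None


injury = Harm("injure one person", endangers_life=False)
fatal = Harm("kill one person", endangers_life=True)
many = Harm("endanger 100 lives", endangers_life=True, people_affected=100)

print(preferred_option(injury, fatal))  # the injury outcome is preferred
print(preferred_option(fatal, many))    # no preference: lives are not counted
```

Returning `None` when both outcomes endanger life mirrors the point made above: the rules forbid ranking by attributes or numbers, but they do not say what the system should then do.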

source: Goethe-Institut | Universität Würzburg

cover image: Ptolemus Consulting Group

author: Barbara Marcotulli

Maker Faire Rome – The European Edition has been committed since its very first edition to making innovation accessible, usable and profitable for all. This blog is always updated and full of opportunities and inspiration for makers, SMEs and all the curious ones who wish to enrich their knowledge and expand their business, in Italy, in Europe and beyond.
